Defining Data Interfaces with FlinkSQL

FlinkSQL is an amazing innovation in data processing: it packages the power of real-time stream processing within the simplicity of SQL. That means you can start with the SQL you know and introduce stream processing constructs as you need them.

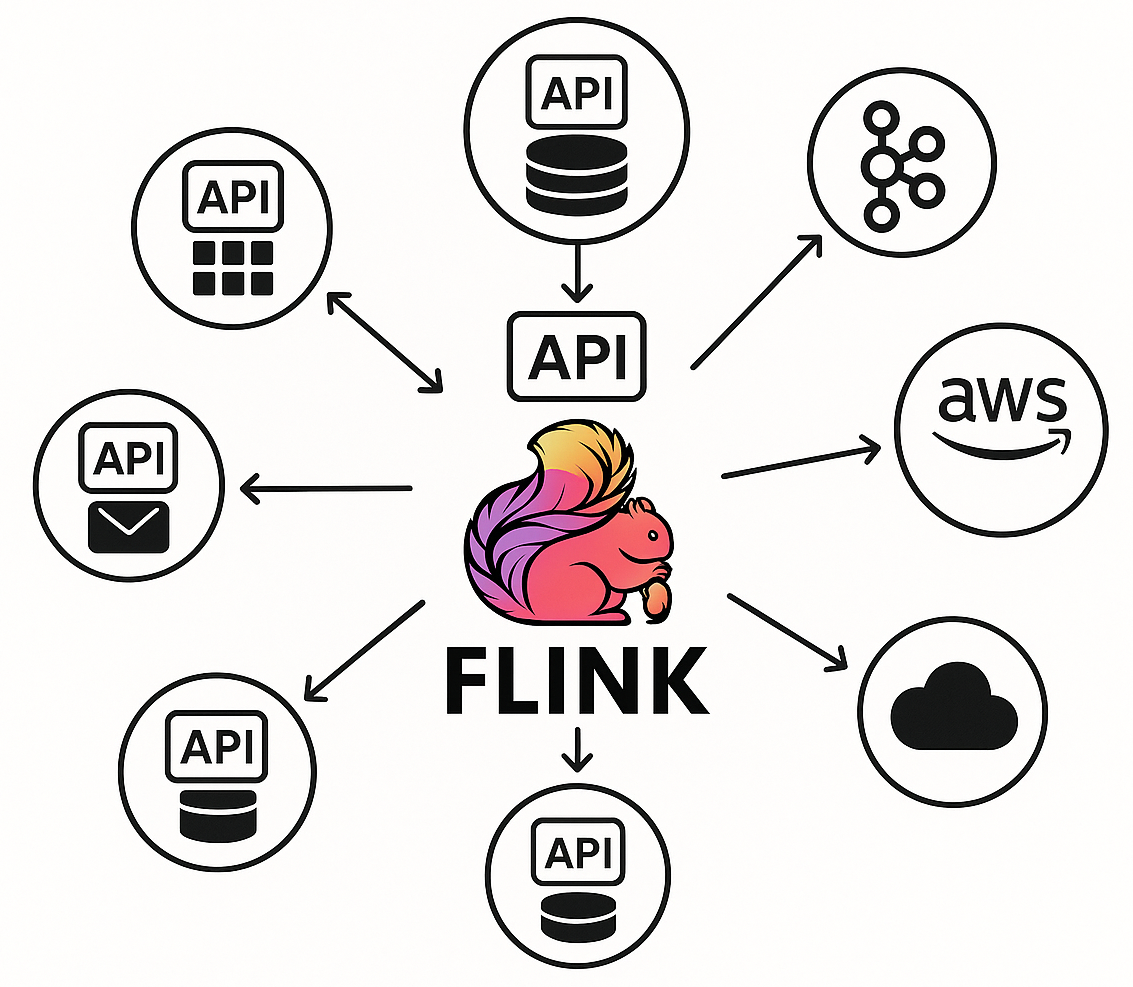

FlinkSQL adds incremental data processing to the classic set-based semantics of SQL. FlinkSQL also supports source and sink connectors, which make it easy to ingest data from, and move data to, other systems. That's a powerful combination that covers many data processing use cases.
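As a minimal illustration of both ideas, the standard Flink SQL below reads from Flink's built-in `datagen` source connector and writes to the built-in `print` sink connector. The table and column names are hypothetical. The `GROUP BY` is ordinary SQL, but on a stream Flink maintains the result incrementally instead of recomputing it from scratch.

```sql
-- Hypothetical source table backed by the built-in datagen connector,
-- which generates random rows at a fixed rate.
CREATE TABLE Clicks (
  userid BIGINT,
  url    STRING
) WITH (
  'connector'         = 'datagen',
  'rows-per-second'   = '5',
  'fields.userid.min' = '1',
  'fields.userid.max' = '10'
);

-- Hypothetical sink table that prints every change row
-- to the TaskManagers' standard output.
CREATE TABLE ClickCounts (
  userid BIGINT,
  clicks BIGINT
) WITH (
  'connector' = 'print'
);

-- Classic set-based aggregation: Flink updates the per-user count
-- as each new click arrives and emits the changed rows to the sink.
INSERT INTO ClickCounts
SELECT userid, COUNT(*) AS clicks
FROM Clicks
GROUP BY userid;
```

The printed output consists of changelog rows (inserts and update pairs), which is how the incremental result of the aggregation becomes visible.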

In fact, it only takes a few extensions to FlinkSQL to build entire data applications. Let's see how that works.

Building Data APIs with FlinkSQL

```sql
-- Events captured through the API (no connector configured)
CREATE TABLE UserTokens (
  userid BIGINT NOT NULL,
  tokens BIGINT NOT NULL,
  request_time TIMESTAMP_LTZ(3) NOT NULL METADATA FROM 'timestamp'
);

-- Total tokens and number of requests per user
/*+query_by_all(userid) */
TotalUserTokens := SELECT userid, sum(tokens) AS total_tokens,
                          count(tokens) AS total_requests
                   FROM UserTokens GROUP BY userid;

-- Parameterized query: a user's requests within a time range, newest first
UserTokensByTime(userid BIGINT NOT NULL, fromTime TIMESTAMP NOT NULL, toTime TIMESTAMP NOT NULL) :=
  SELECT * FROM UserTokens
  WHERE userid = :userid AND request_time >= :fromTime AND request_time < :toTime
  ORDER BY request_time DESC;

-- Subscription: requests that consume more than 100,000 tokens
UsageAlert := SUBSCRIBE SELECT * FROM UserTokens WHERE tokens > 100000;
```

This script defines a sequence of tables. We introduce := as syntactic sugar for the verbose CREATE TEMPORARY VIEW syntax.
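To make the sugar concrete, the `TotalUserTokens` definition above corresponds roughly to the following standard Flink SQL. This sketch assumes a direct one-to-one translation and leaves out the `/*+query_by_all(userid) */` hint, which is an extension rather than part of standard Flink SQL.

```sql
-- Standard Flink SQL form of:
--   TotalUserTokens := SELECT ... FROM UserTokens GROUP BY userid;
CREATE TEMPORARY VIEW TotalUserTokens AS
SELECT userid,
       SUM(tokens)   AS total_tokens,
       COUNT(tokens) AS total_requests
FROM UserTokens
GROUP BY userid;
```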

The UserTokens table does not have a configured connector, which means we treat it as an API mutation endpoint that is connected to Flink through a Kafka topic capturing the events. This makes it easy to build APIs that capture user activity, transactions, or other kinds of events.
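For comparison, wiring `UserTokens` to Kafka by hand in standard Flink SQL might look like the sketch below. The topic name, broker address, consumer group, startup mode, and JSON format are hypothetical placeholders. The `METADATA FROM 'timestamp'` column maps to the Kafka record timestamp, which the Kafka connector exposes as `TIMESTAMP_LTZ(3) NOT NULL`.

```sql
-- Hand-written equivalent of UserTokens with an explicit Kafka connector.
-- Topic, servers, group id, startup mode, and format are hypothetical.
CREATE TABLE UserTokens (
  userid BIGINT NOT NULL,
  tokens BIGINT NOT NULL,
  -- Kafka record timestamp exposed as a metadata column
  request_time TIMESTAMP_LTZ(3) NOT NULL METADATA FROM 'timestamp'
) WITH (
  'connector'                    = 'kafka',
  'topic'                        = 'user-tokens',
  'properties.bootstrap.servers' = 'localhost:9092',
  'properties.group.id'          = 'user-tokens-consumer',
  'scan.startup.mode'            = 'earliest-offset',
  'format'                       = 'json'
);
```

Leaving the connector out of the script means none of these deployment details have to be written by hand for each event table.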